Security teams today face escalating pressure to protect systems from unpredictable threats.

What makes it worse is that many of these threats are now backed by artificial intelligence, and standard tools are struggling to keep up.

Many businesses are trying to close these gaps by exploring new methods that offer speed and precision. One approach gaining momentum is the use of generative AI in cybersecurity.

The shift shows in the numbers. A 2024 report by MarketResearch.biz estimates that the generative AI in security market will grow from USD 1.2 billion in 2023 to USD 12 billion by 2033.
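For a sense of scale, the two figures in that estimate imply a compound annual growth rate of roughly 26% over the ten years. This is simple arithmetic on the quoted numbers, not a growth rate taken from the report itself:

$$
\text{CAGR} = \left(\frac{12}{1.2}\right)^{1/10} - 1 = 10^{0.1} - 1 \approx 0.259
$$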

Keep reading to understand what generative AI in cybersecurity is, along with its use cases, benefits, risks and challenges, best practices, and future trends.

What Is Generative AI In Cybersecurity?

Generative AI in cybersecurity refers to the use of advanced artificial intelligence models that generate new content, make predictions, and run simulations to detect and respond to cybersecurity threats. It allows systems to test defenses against threats in advance and to handle routine tasks without manual input.

In plain terms, generative AI in cybersecurity is a smart tool used to predict and stop cyberattacks. It clearly improves defense strategies, but attackers can also misuse the same technology to build more complex and advanced threats.

Example: Let’s say you are a cybersecurity firm that manually drafts response playbooks after analyzing threats across client systems. By integrating generative AI into your workflow, the system auto-generates customized response plans in minutes. This saves hours of analyst effort and improves response time during live incidents.

Even after understanding the role of generative AI in cybersecurity, many people confuse it with traditional AI. Let’s compare the two side by side.

Generative AI vs Traditional AI in Cybersecurity

Here is how traditional AI and generative AI compare across key factors in cybersecurity.

| Factor | Traditional AI | Generative AI |
|---|---|---|
| Threat Handling | Identifies threats based on known patterns | Simulates and prepares for unknown or new patterns |
| Automation | Executes tasks based on fixed rules | Builds dynamic responses and attack scenarios |
| Training Data | Uses historical logs and labeled datasets | Learns from real and synthetic data |
| Use Case Focus | Intrusion detection and anomaly alerts | Phishing simulation and automated patch creation |
| Approach to Security | Reactive: Flags known issues | Proactive: Prepares systems for evolving and zero-day threats |

Understanding the difference gives your security team a strategic edge, but it is only the context. Now let’s look at the generative AI use cases in cybersecurity that are making the most impact.

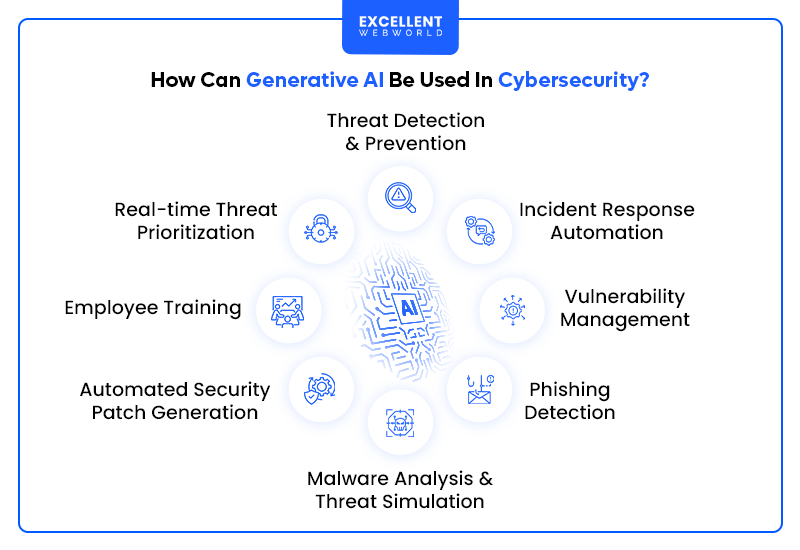

How Can Generative AI Be Used In Cybersecurity?

Generative AI is used in cybersecurity to optimize security operations by simulating threat scenarios and improving detection accuracy. These capabilities help businesses address threats that standard tools fail to identify.

1. Threat Detection and Prevention

Use Case: To monitor system anomalies and respond promptly to previously unseen attacks.

GenAI simulates diverse attack patterns and generates threat vectors, which helps security systems anticipate zero-day exploits even when such vulnerabilities do not appear in historical logs. It reacts in real time, improving responsiveness and precision in threat detection while reducing dependence on fixed rules.

Example: A finance company deploys a GenAI-driven system, showcasing how AI in Fintech is being used for more than just automation. The system notices strange login patterns that do not match past threat patterns, marks the activity as suspicious, and raises an immediate red flag.
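To make "logins that do not match past patterns" concrete, here is a minimal sketch of the baseline comparison such a system performs. It swaps in a simple statistical check (a z-score on login hour plus an unseen-country test) for the model-driven scoring described above; the `Login` record, the threshold of 3.0, and the five-event minimum are all illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Login:
    user: str
    hour: int      # hour of day, 0-23 (treated as linear; midnight wraparound is ignored)
    country: str


def is_suspicious(history: list[Login], event: Login,
                  z_threshold: float = 3.0, min_events: int = 5) -> bool:
    """Flag a login that deviates from the same user's past logins."""
    past = [h for h in history if h.user == event.user]
    if len(past) < min_events:
        return False  # not enough baseline data to judge this user
    hours = [p.hour for p in past]
    mu, sigma = mean(hours), pstdev(hours)
    if sigma > 0:
        z = abs(event.hour - mu) / sigma
    else:
        # Every past login happened at the same hour, so any other hour is an outlier
        z = 0.0 if event.hour == mu else float("inf")
    unseen_country = event.country not in {p.country for p in past}
    return z > z_threshold or unseen_country
```

With ten past daytime logins from the US, a 3 a.m. login or a login from a new country is flagged, while a 10 a.m. US login is not. A real deployment would learn far richer features than these two.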

2. Incident Response Automation

Use Case: To optimize repetitive response workflows and minimize analyst burden.

As soon as a security alert is triggered, generative AI immediately steps in to generate customized response playbooks. It also simulates how to contain the threat and guides analysts to the next steps. Such automated support saves time and reduces errors in urgent situations.

Example: Let’s say a US-based hospital network integrates GenAI into its incident response system. If a ransomware event occurs, the AI generates immediate containment actions, helping the network achieve 60 percent faster incident response.
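Because analysts act on whatever the model returns, a common pattern is to request structured output and validate it before anyone sees it. The sketch below assumes a hypothetical LLM that replies in JSON. `build_playbook_prompt`, `parse_playbook`, and the three section names are illustrative choices, and no real model API is called.

```python
import json

REQUIRED_SECTIONS = ("containment", "eradication", "recovery")


def build_playbook_prompt(alert: dict) -> str:
    """Assemble the context a (hypothetical) LLM would receive for one alert."""
    return (
        "You are assisting an incident responder.\n"
        f"Alert type: {alert['type']}\n"
        f"Affected hosts: {', '.join(alert['hosts'])}\n"
        f"Severity: {alert['severity']}\n"
        "Respond with JSON only, using the keys "
        + ", ".join(REQUIRED_SECTIONS)
        + ". Each value must be an ordered list of short steps."
    )


def parse_playbook(raw: str) -> dict:
    """Validate the model's reply; raise instead of passing a malformed plan along."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("playbook must be a JSON object")
    for section in REQUIRED_SECTIONS:
        steps = data.get(section)
        if not isinstance(steps, list) or not all(isinstance(s, str) for s in steps):
            raise ValueError(f"section {section!r} must be a list of strings")
    # Generated plans are proposals: an analyst approves them before execution
    data["status"] = "pending_analyst_approval"
    return data
```

Rejecting malformed replies outright, rather than trying to repair them, keeps a half-formed containment plan from reaching responders mid-incident.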

3. Vulnerability Management

Use Case: To prioritize high-risk vulnerabilities and recommend targeted patching strategies.

Instead of relying on the CVSS score alone, the GenAI model assesses environmental context and system dependencies to rank vulnerabilities. The system also generates human-readable patching instructions or remediation scripts tailored to specific infrastructure. While GenAI automates prioritization and remediation, organizations often complement it with web application penetration testing to manually verify critical areas and uncover hidden flaws.

Example: A cloud-based enterprise integrates GenAI to review all open vulnerabilities and receives a priority list with remediation steps for its Kubernetes workloads, strengthening its cloud security. This shrinks the patching backlog and shortens exposure time. It also helps the organization manage its penetration testing costs, because human testers spend less time finding simple vulnerabilities manually.
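The ranking step itself can be shown without a generative model. This sketch scales the CVSS base score by environmental context. The multipliers, the 1 to 3 criticality scale, and the `VULN-*` labels are hypothetical and are not a standard formula. CVSS defines its own environmental metric group, which a production system would more likely use.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only
EXPOSURE_WEIGHT = 1.3
PUBLIC_EXPLOIT_WEIGHT = 1.5
CRITICALITY_WEIGHT = {1: 0.7, 2: 1.0, 3: 1.2}  # 1 = low value, 3 = business-critical


@dataclass
class Finding:
    label: str
    cvss: float             # CVSS base score, 0.0-10.0
    internet_facing: bool
    exploit_public: bool
    asset_criticality: int  # 1, 2, or 3


def context_score(f: Finding) -> float:
    """Scale the base score by exposure, exploit availability, and asset value."""
    score = f.cvss
    if f.internet_facing:
        score *= EXPOSURE_WEIGHT
    if f.exploit_public:
        score *= PUBLIC_EXPLOIT_WEIGHT
    return score * CRITICALITY_WEIGHT[f.asset_criticality]


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Highest contextual risk first."""
    return sorted(findings, key=context_score, reverse=True)
```

Under these weights, a 9.8 on an isolated, low-value host ranks below a 7.5 that is internet-facing and has a public exploit. That reordering is the point of context-aware prioritization.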

4. Advanced Phishing Detection

Use Case: To identify AI-generated phishing attempts and prepare internal teams with realistic simulations.

GenAI analyzes subtle anomalies in language, sender behavior, and link structure to identify phishing attacks. At the same time, these AI models generate phishing templates that security teams can use in simulations to improve employee awareness and response times.

Example: Imagine a phishing email suddenly lands in your inbox. A generative AI tool can quickly classify it and generate a mitigation plan covering mail server filtering rules, user alert templates, and follow-up monitoring steps.
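Whatever model does the final classification, it usually starts from signals like the sender behavior and link structure mentioned above. Below is a minimal, rule-based sketch of that signal extraction. The phrase list and the function names are illustrative assumptions, and real filters use far richer features.

```python
from urllib.parse import urlparse

# Illustrative phrase list only
URGENCY_PHRASES = ("urgent", "verify your account", "password expires", "immediately")


def _host(url_or_text: str) -> str:
    """Extract a lowercase hostname, tolerating text that has no scheme."""
    candidate = url_or_text if "://" in url_or_text else "http://" + url_or_text
    return (urlparse(candidate).hostname or "").lower()


def phishing_signals(sender: str, reply_to: str, body: str,
                     links: list[tuple[str, str]]) -> list[str]:
    """Return human-readable red flags; `links` holds (display_text, href) pairs."""
    signals = []
    if reply_to.rsplit("@", 1)[-1].lower() != sender.rsplit("@", 1)[-1].lower():
        signals.append("reply-to domain differs from sender domain")
    for text, href in links:
        shown, actual = _host(text), _host(href)
        # Only compare when the visible text itself looks like a domain
        if "." in shown and " " not in shown and shown != actual:
            signals.append(f"link text shows {shown} but points to {actual}")
    lowered = body.lower()
    signals.extend(f"urgency phrase: {p!r}" for p in URGENCY_PHRASES if p in lowered)
    return signals
```

Returning readable reasons rather than a bare score makes the output usable in the user alert templates and analyst follow-up described above.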

5. Malware Analysis and Threat Simulation

Use Case: To break down unknown malware variants and replicate their attack sequences.

Generative AI gives your team a head start by breaking down malicious code and recognizing unfamiliar payload patterns. This improves threat modeling precision and allows teams to prepare defense strategies against zero-day variants.

Example: A cybersecurity R&D lab feeds unknown malware into a GenAI model that reconstructs the malware’s logic and creates variations. The team identifies a variant that slips past their endpoint defenses. This prompts immediate improvements to their detection rules.
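Reconstructing malware logic with a generative model is beyond a short example. One standard triage step such pipelines build on is measuring byte entropy, because packed or encrypted payloads approach the maximum of 8 bits per byte. The 256-byte window and the 7.2 threshold below are illustrative assumptions, not established cutoffs.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Bits per byte: 0.0 for constant data, 8.0 for uniformly distributed bytes."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def high_entropy_windows(data: bytes, window: int = 256, threshold: float = 7.2) -> list[int]:
    """Return offsets of fixed-size windows whose entropy exceeds the threshold."""
    return [
        offset
        for offset in range(0, len(data) - window + 1, window)
        if shannon_entropy(data[offset:offset + window]) > threshold
    ]
```

High-entropy regions are only a hint (compressed media also scores high), so they mark where deeper analysis should start rather than serving as a verdict.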

6. Automated Security Patch Generation

Use Case: To streamline patch development for documented bugs and security gaps.

GenAI trained on secure coding practices and exploit repositories assists developers by generating code-level fixes for specific vulnerabilities. This speeds up the remediation process and helps development teams fix security issues with precision.

Example: An AI development firm that builds custom models integrates GenAI to detect security flaws in API endpoints. When a vulnerability is flagged in a FastAPI microservice, the AI generates a secure patch along with a regression test script. This helps the team address the issue within 24 hours.
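As an illustration of what "a secure patch plus a regression test" can look like, here is a before-and-after pair for a SQL injection flaw. To stay self-contained it uses the standard-library `sqlite3` module instead of a FastAPI route. The function names and the `users` table are hypothetical.

```python
import sqlite3


def find_user_vulnerable(conn: sqlite3.Connection, username: str) -> list:
    # BEFORE: string formatting lets the input rewrite the query (SQL injection)
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{username}'").fetchall()


def find_user_patched(conn: sqlite3.Connection, username: str) -> list:
    # AFTER: a parameterized query; the driver treats the input strictly as data
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


def test_lookup_rejects_injection() -> None:
    """Regression test: the classic tautology payload must not return every row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "' OR '1'='1"
    assert len(find_user_vulnerable(conn, payload)) == 2  # the flaw leaks all users
    assert find_user_patched(conn, payload) == []          # the patch matches nothing
    assert find_user_patched(conn, "alice") == [(1, "alice")]
```

Keeping the exploit payload in the test means a future change that reintroduces string-built SQL fails the regression test, whether the change comes from a person or a model.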

7. Employee Cybersecurity Training

Use Case: To design and create role-specific training scenarios that mirror current threat models.

Generative AI personalizes cybersecurity training by generating contextual challenges based on each employee’s role and the mistakes they have made before. This makes training more dynamic and directly aligned with practical threat conditions.

Example: A healthcare firm trains its staff using GenAI, which crafts fake emails such as bogus appointment changes or urgent login alerts. After each exercise, the system explains which signs are suspicious and why. Within a short period, employees become 40% better at avoiding real phishing attempts, highlighting how AI in healthcare improves cybersecurity awareness.
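The personalization logic can be much simpler than the generated email text. One approach is to rank scenario types by each employee's past mistakes. In this sketch the scenario catalog and role names are hypothetical. A generative model would then write the actual exercise content for each selected type.

```python
from collections import Counter

# Hypothetical scenario catalog keyed by role; "all" applies to every employee
SCENARIOS = {
    "clinical": ["fake appointment change", "urgent login alert"],
    "finance": ["invoice change request", "payroll update lure"],
    "all": ["password reset lure", "shared document lure"],
}


def next_exercises(role: str, past_failures: list[str], k: int = 2) -> list[str]:
    """Pick the k scenario types this employee most needs to practice."""
    pool = SCENARIOS.get(role, []) + SCENARIOS["all"]
    misses = Counter(past_failures)
    # sorted() is stable, so scenarios with equal miss counts keep catalog order
    return sorted(pool, key=lambda s: -misses[s])[:k]
```

A clinician who has twice fallen for a password-reset lure gets that scenario first, followed by the role-specific one they also missed.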

8. Real-Time Threat Prioritization

Use Case: To help security analysts sort and escalate severe security events.

First, generative AI collects signals from data sources like user activity and system logs. Next, it correlates these signals to identify patterns or risks. Finally, it assigns a live risk score and recommends actions to minimize the threat. Together, these steps help analysts prioritize what matters most during busy alert periods.

Example: A media firm integrates GenAI to sort alerts from diverse platforms. The 300+ low-priority alerts it used to handle each day are narrowed to just 5% of that volume, so analysts no longer have to manually review hundreds of notifications.
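The collect, correlate, and score steps above can be sketched with a fixed weight table. In practice a trained model would learn these weights. Here the signal names, the weights, and the escalation threshold of 8 are hypothetical.

```python
from collections import defaultdict

# Hypothetical per-signal weights; a learned model would replace this table
SIGNAL_WEIGHTS = {"failed_login": 1, "new_device": 2, "privilege_change": 5, "large_upload": 8}


def triage(alerts: list[tuple[str, str]], escalate_at: int = 8) -> list[tuple[str, int]]:
    """Correlate (entity, signal) alerts per entity and return those worth escalating."""
    scores: dict[str, int] = defaultdict(int)
    for entity, signal in alerts:
        scores[entity] += SIGNAL_WEIGHTS.get(signal, 0)  # unknown signals add nothing
    escalated = [(entity, score) for entity, score in scores.items() if score >= escalate_at]
    return sorted(escalated, key=lambda pair: pair[1], reverse=True)
```

Three failed logins on one host stay below the bar, while a new device, a privilege change, and a failed login on the same host add up to an escalation. Correlating by entity is what turns many weak alerts into one actionable one.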

While reading through these use cases, you may have spotted a few benefits already. To give you a clearer view, here are the top benefits of applying generative AI to cybersecurity.

5 Core Benefits of Applying Generative AI to Cybersecurity

Why should you integrate generative AI into your cybersecurity strategy? It improves threat detection precision, speeds up incident response, and strengthens your defenses against advanced cybersecurity threats. However, success depends on how well the technology is implemented. Many teams still make critical mistakes in GenAI development, rushing integration without proper alignment, which can lead to overlooked vulnerabilities or misfires in threat detection.

Let’s discuss each benefit in detail:

1. Improves Accuracy in Threat Detection

Generative AI can catch what standard tools miss. GenAI scans large datasets to detect early signs of threats and malware. It also creates synthetic threat variants that help validate and tune the detection model, making detection more precise. This early-stage enrichment helps security teams catch misclassified or stealthy threats sooner.
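The idea of validating a detector with synthetic variants can be shown at toy scale. In this sketch, simple string mutations (random upper-casing plus one look-alike character swap) stand in for a generative model. The homoglyph map and the two example detectors are assumptions for illustration.

```python
import random

HOMOGLYPHS = {"o": "0", "l": "1", "a": "@", "e": "3"}


def mutate(sample: str, rng: random.Random) -> str:
    """Make one variant: random upper-casing plus a single look-alike character swap."""
    chars = [c.upper() if rng.random() < 0.3 else c for c in sample]
    swappable = [i for i, c in enumerate(chars) if c.lower() in HOMOGLYPHS]
    if swappable:
        i = rng.choice(swappable)
        chars[i] = HOMOGLYPHS[chars[i].lower()]
    return "".join(chars)


def detection_rate(detector, seeds: list[str], variants_per_seed: int = 50, seed: int = 0) -> float:
    """Share of synthetic variants the detector still flags (fixed seed for repeatability)."""
    rng = random.Random(seed)
    variants = [mutate(s, rng) for s in seeds for _ in range(variants_per_seed)]
    return sum(1 for v in variants if detector(v)) / len(variants)


def naive_detector(text: str) -> bool:
    return "password" in text  # exact, case-sensitive match


def normalized_detector(text: str) -> bool:
    # Undo case changes and map look-alike characters back before matching
    return "password" in text.lower().translate(str.maketrans("01@3", "olae"))
```

Running both detectors against variants of "reset your password" shows the exact-match rule missing most variants and the normalized rule catching all of them. This is the kind of gap that synthetic variants are meant to expose before attackers find it.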

2. Accelerates Incident Handling with AI Automation

Instead of waiting for human input, GenAI accelerates response by generating real-time response plans based on the nature of the breach. By producing contextual playbooks and suggesting next steps, it reduces analyst fatigue. This is an advantage in high-stakes situations where every second counts.

3. Strengthens Proactive Defense Mechanisms

Unlike reactive models, GenAI recreates attack vectors. This allows security teams to identify and fix weaknesses before a real attack occurs, leaving systems better prepared for zero-day exploits and evolving threat strategies.

4. Minimizes Human Error in Cyber Operations

In routine cybersecurity operations, AI-generated scripts and reports reduce the chance of manual errors. Whether it is a configuration change or a patch fix, generative AI in security operations can evaluate and refine these actions, improving accuracy over manual review alone.

5. Expands Security Infrastructure with Flexibility

Generative AI tools integrate well with existing security systems and scale with growing demands. These models adapt in real time, whether to a new type of data or to defenses tailored per business unit. Legacy tools rarely offer such flexibility.

Risks and Challenges of Using Generative AI in Cybersecurity

Without question, GenAI expands what cybersecurity can do, but it also introduces risks that need effective solutions. Here are the key generative AI security risks and challenges, along with appropriate solutions.

1. Fails to Prevent Misuse by Attackers

The same technology that strengthens cyber defenses can also be exploited to launch attacks. Cybercriminals use it in many ways, from crafting convincing phishing emails to automating parts of malware generation. This dual-use nature makes containment a constant challenge.

Security teams should evaluate generative outputs from a risk perspective rather than limiting their role to defense. Mock attack exercises help you understand how generative AI could be misused and where weak spots need strengthening. You can also integrate AI content detection tools into email security and identity verification systems.

2. Compromises Ethical and Privacy Expectations

Large generative AI models sometimes expose confidential data or mirror user data patterns too closely. When private or sensitive data is used to generate content, such behavior can violate ethical standards and regulatory requirements.

Businesses need to implement strict data masking and access control mechanisms before any data is used for training. You should also audit AI-generated output regularly and define acceptable-use policies to support ethical use and regulatory compliance.
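Here is a minimal sketch of masking before data reaches training or prompts. It assumes the sensitive identifiers can be caught with regular expressions. The three patterns are illustrative and would miss many real identifiers, so treat this as a starting point rather than a complete anonymization scheme.

```python
import re

# Illustrative patterns only; production masking needs broader, tested rules
MASKING_RULES = [
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "<SSN>"),  # US Social Security number format
]


def mask(text: str) -> str:
    """Replace each matched identifier with a fixed placeholder token."""
    for pattern, token in MASKING_RULES:
        text = pattern.sub(token, text)
    return text
```

Fixed placeholder tokens keep the log's structure readable for the model while removing the values themselves. Pair this with access controls, because masking alone does not prevent re-identification.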

3. Lacks Accuracy Due to Hallucinations

Generative AI systems can produce outputs that look accurate but, on closer analysis, turn out to be factually incorrect or misleading. In cybersecurity, such inaccuracies lead to ineffective patch recommendations and alert fatigue from false positives.

To avoid this, a human reviewer must validate generative outputs before they are acted on. Connecting the model to internal systems or retrieval mechanisms also helps reduce hallucinations and improve factual consistency.
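One lightweight guard assumes you keep an internal inventory of known CVEs and host names. It flags any identifier in the model's output that the inventory cannot confirm, before a human reviews it. The `host-...` naming pattern is a hypothetical convention.

```python
import re

CVE_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}")
HOST_PATTERN = re.compile(r"\bhost-[\w-]+\b")  # hypothetical internal naming convention


def unverified_references(ai_text: str, known_cves: set[str], known_hosts: set[str]) -> list[str]:
    """List identifiers in model output that the internal inventory cannot confirm."""
    problems = [f"unknown CVE {c}" for c in CVE_PATTERN.findall(ai_text) if c not in known_cves]
    problems += [f"unknown host {h}" for h in HOST_PATTERN.findall(ai_text) if h not in known_hosts]
    return problems
```

An empty result does not prove the recommendation is correct. A non-empty result is a cheap, reliable sign that the model has invented something.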

4. Unable to Meet Compliance Requirements

Generative AI models are complex and offer limited explainability. This makes it difficult to comply with standards like GDPR, HIPAA, or ISO 27001, and the lack of transparency in decision-making complicates auditing.

You can add explainability layers that track model behavior and reasoning, and keep documentation for every decision the AI makes. Enterprises should align GenAI deployments with a governance framework that is auditable by design.
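Keeping documentation for every AI decision can start with an append-only log in which each entry includes the hash of the previous one. If any entry is later edited, the chain no longer verifies. This sketch is tamper-evident, not tamper-proof, because anyone who can rewrite the whole log can recompute it. The field names and the `triage-model` label are hypothetical.

```python
import hashlib
import json
import time
from typing import Optional

GENESIS = "0" * 64


def _digest(record: dict) -> str:
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def log_decision(log: list, model: str, inputs: dict, output: str, reviewer: Optional[str]) -> dict:
    """Append an AI decision; each entry commits to the previous entry's hash."""
    record = {
        "ts": time.time(),
        "model": model,
        "inputs": inputs,
        "output": output,
        "reviewer": reviewer,  # None means no human has signed off yet
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    record["hash"] = _digest(record)
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Storing the latest hash somewhere the log writer cannot modify, such as a separate system, closes most of the gap left by the recompute caveat.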

Best Practices for Implementing Generative AI in Cybersecurity

Here are the best practices to follow when implementing generative AI in your cybersecurity environment.

1. Select Reliable and Transparent AI Platforms

Don’t just go for performance; transparency equally matters. You need to look for AI platforms that document how the model is trained and the logic behind the outputs. This helps avoid blind reliance on outputs and supports responsible deployment.

2. Audit Model Outputs for Accuracy and Bias

Even the smartest system can go wrong. Regularly reviewing AI’s responses helps catch false positives or unethical recommendations. You can set up a routine model evaluation process based on your specific goals to ensure the system is aligned with operational needs.
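A routine evaluation can begin by comparing the model's verdicts with a sample that analysts have already labeled. The sketch uses standard precision and recall. How you draw the sample and what thresholds count as passing are yours to define.

```python
def evaluate(predictions: list[bool], labels: list[bool]) -> dict:
    """Compare model verdicts with analyst-confirmed labels on a review sample."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must be the same length")
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, truth in pairs if p and truth)
    fp = sum(1 for p, truth in pairs if p and not truth)   # false alarms
    fn = sum(1 for p, truth in pairs if not p and truth)   # missed threats
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positives": fp,
        "missed": fn,
    }
```

Tracking false positives and misses separately matters here: the first drives alert fatigue, the second drives risk.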

3. Integrate Human Oversight into AI Workflows

AI in cybersecurity works best when paired with experienced human teams. Introduce checkpoints where analysts verify and approve AI-generated actions. This reduces errors and keeps AI decisions grounded in context.
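A checkpoint like this can be sketched as a small approval gate. Only actions below a risk cutoff run automatically, and everything else waits for a named analyst. The 1 to 5 risk scale and the `auto_max_risk` default are hypothetical choices.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    AUTO_APPLIED = "auto_applied"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ProposedAction:
    description: str
    risk: int                       # hypothetical scale: 1 (trivial) to 5 (disruptive)
    status: Status = Status.PENDING
    reviewed_by: str = ""


def route(action: ProposedAction, auto_max_risk: int = 1) -> ProposedAction:
    """Let only low-risk actions through automatically; hold the rest for an analyst."""
    if action.risk <= auto_max_risk:
        action.status = Status.AUTO_APPLIED
    return action


def review(action: ProposedAction, analyst: str, approved: bool) -> ProposedAction:
    """Record an analyst's decision on a held action."""
    if action.status is not Status.PENDING:
        raise ValueError("only pending actions can be reviewed")
    action.status = Status.APPROVED if approved else Status.REJECTED
    action.reviewed_by = analyst
    return action
```

Recording who approved each action also supports the documentation requirement discussed under compliance.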

4. Educate Cybersecurity Teams on AI Usage

Train your teams to understand what AI does and where its limits lie. Internal workshops, real-time simulations, and internal learning tools help teams use AI effectively.

To ensure effective AI adoption, collaborate with an experienced cybersecurity services provider to design AI-focused training programs and keep teams updated on evolving threat landscapes.

5. Comply with Data Privacy and Governance Standards

Before deployment, verify that all data handling aligns with legal frameworks and your internal policies. This includes anonymizing data and logging all AI activity for accountability. Clear governance ensures ethical use across departments.

Future Trends and Predictions of Generative AI for Cybersecurity

Staying informed about generative AI trends in cybersecurity helps businesses anticipate risks and strengthen their security measures. Here are the trends expected to shape cybersecurity with generative AI.

1. Moving Toward Predictive Threat Modeling

In the coming years, GenAI is expected to get better at predicting cyber threats by studying detailed behavior patterns and simulated scenarios. This will help businesses prepare defenses for likely threats instead of reacting to past incidents. Anticipating vulnerabilities early keeps you one step ahead of attackers.

2. Accelerating AI-Led Security Orchestration

AI-led orchestration is evolving as AI takes a growing role in automating detection, analysis, and response across security tools. This will reduce manual workload and accelerate incident handling. It will also help unify disparate security systems, improving communication and efficiency across platforms.

3. Integrating into Zero Trust Frameworks

AI-driven adaptive security will continuously monitor user behavior and access attempts to make dynamic security decisions. This supports continuous verification and limits unauthorized access. Such integrations will also keep security systems flexible as risk profiles change.

4. Driving Cross-Sector Collaboration in Cybersecurity

The ability of generative AI models to create anonymized threat artifacts will simplify collaboration and improve threat intelligence. This will enable collaboration across sectors without the risk of exposing sensitive data.

5. Building Toward Autonomous Cyber Defense

Over time, existing security frameworks will evolve into intelligent systems that identify and respond to cyber threats autonomously, with minimal human intervention. Generative AI will drive this evolution, enabling systems to simulate behaviors, react promptly, and adapt through real-time learning.

Make Generative AI Work for Your Security Goals

Every cybersecurity challenge is unique, and your approach to generative AI should be too. A tailored strategy ensures AI adds value rather than complexity. That is why we don’t just provide AI tools. As a reliable generative AI development company, we partner with you to understand your specific security requirements and develop solutions that fit your environment.


When development is driven by cybersecurity needs, GenAI becomes a responsible co-pilot in defense. Want to learn more about how AI in software development can support your security environment? Schedule a call with our generative AI experts and get clarity on the right approach to your security goals.

FAQs About Generative AI and Cybersecurity

How is generative AI used in cybersecurity?

Generative AI identifies evolving threats and automates their detection within systems. It also mimics complex attack scenarios and generates custom response playbooks to guide a faster response during incidents. The technology helps security teams improve speed and accuracy across operations.


What types of AI are used in cybersecurity?

Cybersecurity uses different types of AI, including machine learning and deep learning, as well as generative models such as Generative Adversarial Networks (GANs), Transformers, and LLMs. Each type addresses specific tasks like anomaly detection or response automation.

Will generative AI replace cybersecurity professionals?

No. Generative AI can support and enhance human capabilities, but it does not replace expertise. It processes large amounts of routine data with ease, so security professionals can focus on strategic planning and threat investigations.


Article By

Paresh Sagar is the CEO of Excellent Webworld. He firmly believes in using technology to solve challenges. His dedication and attention to detail make him an expert in helping startups in different industries digitalize their businesses globally.